Why every metric eventually breaks down

Every advertiser has a favorite signal. Viewability, attention, fraud scores, and “premium” lists all promise clarity in a system built on uncertainty, but none of them tells the full story.

For years, these inferred metrics have served as stand-ins for quality. Viewability tells buyers that an ad appeared on-screen, brand safety tools label content as safe or unsafe, and attention scores claim to quantify engagement. Yet all of them rely on indirect signals: assumptions drawn from partial data rather than direct observation of what’s on the page.

As a result, what began as an effort to measure quality has turned into a reliance on proxies. Viewability tends to show whether a player appeared on screen, not whether anyone watched. Attention models often estimate engagement without accounting for the noise of autoplay. And “premium” lists often reward reputation, not actual performance.

In theory, these signals should align. In practice, however, they often conflict – and when they do, buyers are left guessing. That’s because most of these tools infer quality from limited data instead of observing what’s actually happening on the page.

That’s what DeepSee.io recently set out to test. We wanted to see what happens when you stop trusting secondhand signals and start looking at the web itself. By observing how video ads actually load, play, and capture attention, we found how observed data turns the ground truth of the page into something advertisers and publishers can finally act on.

What the observed web shows about instream video quality

Video advertising was meant to be the most measurable format in digital. Instead, it has become one of the most misrepresented.

Take “instream.” The term has become shorthand for safe, high-quality inventory, yet verification systems rarely confirm what appears on the page. They rely on sampled tags and post-bid analysis that miss what users actually see. As a result, autoplay boxes and mislabeled placements get counted as true instream impressions.

On paper, metrics like these look clean. But in practice, human attention is nowhere to be found.

To better understand these imperfect signals, DeepSee.io analyzed more than 60,000 websites declaring instream video. Our findings show just how quickly inferred signals collapse once you observe concrete behavior.

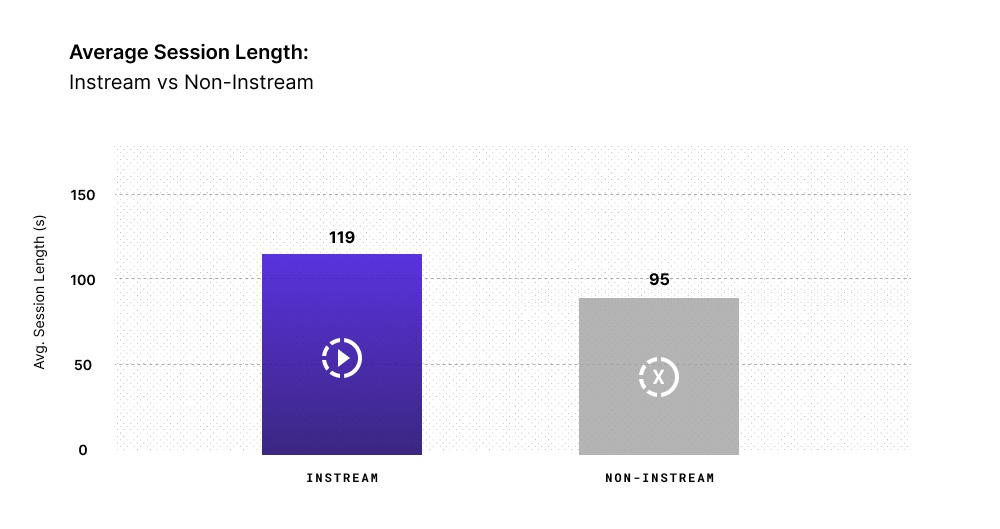

Across the dataset, pages that declare instream video behave very differently from those that don’t:

- Users stay 13% longer and open with sound more often.

- Risk rises from 0.03% in the top 10,000 domains to 27% for domains ranked beyond the top one million, but verified long-tail publishers maintain strong engagement when ownership and structure are clean.

- Smaller, verified sites often outperform the biggest “premium” domains in both efficiency and attention.

- Clean, single-player pages typically clear at lower CPMs and deliver focused, human attention.

- Cluttered pages that load multiple autoplay players inflate auctions and divide focus.

- Rank predicts popularity, not performance.

These findings reveal the limits of inferred signals. Viewability and safety scores might suggest quality, but only direct observation exposes how a page actually behaves.

Why observed data is more valuable than perfect signals

Like instream labels, other inferred metrics eventually break down because they reduce the thing they’re trying to measure to an inaccurate abstraction. For example:

- Viewability measures pixels, not people

- Brand safety measures reputation, not on-page integrity

- Attention models measure probability, not human experience

The more these signals are optimized, the further they drift from truth; none are a perfect signal because none see the full picture.

That’s where observation comes in. By crawling and classifying every page where ads appear, DeepSee.io records how video inventory actually behaves: how many players load, how they play, and who owns them. Doing so enables us to turn the guesswork of verification into verifiable facts.

How advertisers can use observed data (and how everyone else benefits)

Observed data like this gives advertisers the power to fund quality they can prove. With it, advertisers can:

- Use page-level insight to understand player count, playback type, and ownership

- Bid to behavior, not reputation

- Balance premium reach with verified long-tail supply for cleaner, more efficient attention

For publishers, this transparency represents a competitive advantage. Clean playback behavior and clear ownership attract stronger demand and higher-quality advertisers.

For the industry as a whole, observation reconnects measurement with what people actually experience – the difference between counting impressions and measuring attention.

Replacing signals with proof

What our instream analysis proves is that every signal eventually breaks down under scrutiny. That’s because each metric, no matter how sophisticated, simplifies what it cannot see.

Even the safest, most “premium” inventory can hide inefficiencies that only page-level inspection reveals. Observation, not inference, is the new standard for quality.

DeepSee.io makes that standard possible. Our platform verifies instream placements, identifies legitimate publishers across the open web, and exposes where true audience engagement lives.

The observed web isn’t theoretical. It’s measurable, visible, and available today.

Download our full report, Optimizing for Video Quality Isn’t as Simple as Buying the Biggest Sites, or contact our team to learn how DeepSee.io can help you replace signals with proof.