Traffic laundering is an exploitative practice in which a middleman takes visitors from one site and uses them to generate visits to other sites that have paid for human traffic.

If you’ve spent much time on the web, you’ve probably experienced the following: you visit a website, and interacting with any content on the page spawns a popup. You probably closed the offending window and moved on, but the damage was already done.

With that visit, you made a traffic salesman’s day and created a valuable human visit for a thirsty publisher. The big losers in this situation are you and the advertisers who paid for space on the popped-up page.

Traffic launderers love to work with fringe sites that have tons of real users but a hard time monetizing them: illegal movie and TV streaming sites, torrent and warez sites, and the like.

According to ad fraud researcher Dr. Augustine Fou: “Popunders are spawned from piracy and porn sites when users click the play button, or what was disguised as the play button; it is a user initiated action which creates the popunder (which the user cannot see).

Content and other ads load into the popunder as long as they are on the page, and all of it registers as “valid” traffic since it is on a real device and real browser (except the human wasn’t aware it was happening in the background).” He elaborates on this topic in his article “Ad-Supported Piracy Is Thriving, Thanks To Programmatic Ad Tech.”

The scheme works because invalid traffic (IVT) trackers struggle to detect traffic generated this way. The laundering process strips any trace of suspicious referrer headers, so traditional impression tracking could only detect it if the vendor had trackers at both ends of the user’s journey.

The problem is that the publishers who allow laundering aren’t the kind of reputable chaps who employ IVT tracking tech, and the middlemen certainly aren’t going to expose themselves (if they can help it).

Traffic Laundering Funds Publishers that Advertisers Would Rather Not Support

There’s no getting around it: there is a lot of unpleasant and polarizing content out there, especially this year.

More than ever, advertisers are investing in brand safety solutions that prevent their ads from loading next to content that does not align with the brand’s values.

Nandini Jammi and Claire Atkin, co-founders of Check My Ads, describe the goals of brand safety monitoring in their recent newsletter: “Brand safety is not about a page-by-page analysis. No social media crisis will come from an awkward ad placement. What will create a brand safety crisis, though, is funding organizations that peddle dangerous rhetoric (hate speech, conspiracy theories, dangerous disinformation).”

Even in the best-case scenario, where brand safety tech produces no false positives, hate and disinformation outlets can still easily make money by selling their human traffic to middlemen who will launder it for them.

In this article, we offer a concrete example of where this is occurring today.

Divisive Content Thrives in Tumultuous Times

As part of DeepSee’s monitoring of the web, we check for the articles that “haters” tend to link to.

More precisely, we take search terms sourced from hatebase.org (the world’s largest structured repository of regionalized, multilingual hate speech), run them through the Twitter search API, and tally the most linked-to domains.
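The tallying step can be illustrated with a short Python sketch. This is a minimal sketch, not DeepSee’s actual pipeline: it assumes the matching tweets have already been fetched and their links expanded to full URLs (shortened links would need to be resolved first), and the function name `top_linked_domains` and the sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def top_linked_domains(urls, n=10):
    """Return the n most frequent hostnames among a list of linked URLs."""
    counts = Counter()
    for url in urls:
        host = urlparse(url).hostname  # None for malformed or relative URLs
        if not host:
            continue
        # Treat "www.example.com" and "example.com" as the same domain.
        if host.startswith("www."):
            host = host[4:]
        counts[host] += 1
    return counts.most_common(n)


# Hypothetical expanded URLs from tweets that matched the search terms.
sample = [
    "https://www.example-news.com/story-1",
    "https://example-news.com/story-2",
    "https://another-site.org/post?id=3",
    "not a url",
]
print(top_linked_domains(sample, n=2))
```

Counting hostnames rather than full URLs is what surfaces publishers instead of individual articles, which is the level at which monetization decisions are made.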

This analysis is directionally helpful for identifying publishers with highly editorialized and polarizing content, and it also helps us find publishers who might get creative when it comes to monetization.

Around August 21st, we saw a lot of posts linking to an article from therightscoop.com titled: “WATCH: Despicable feminazi thugs attack mother and 7-yr-old son outside DNC for supporting Trump.”

This site uses hidden popunders, which are triggered when you click any link on the site. Clicking any link loads the brand-safe site techandgeek.com, which has PLENTY of advertisements for high-paying brands.

In just one visit, we saw ads for Fortnite, BMW, Dasani, Petco, and more. Check out the video below to see the whole user journey (best viewed in HD).

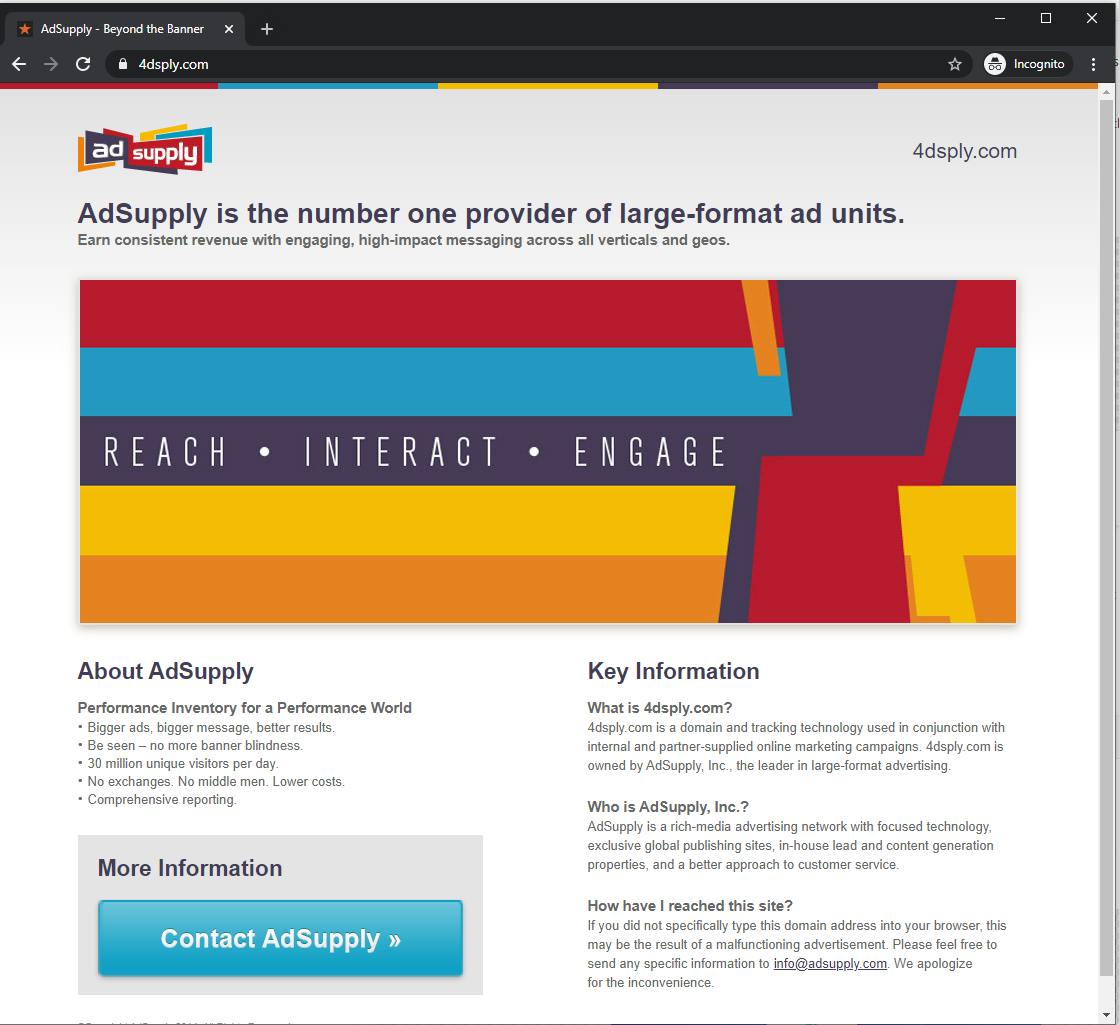

This laundering code belongs to AdSupply, one of the most frequently observed middlemen in traffic laundering (despite not advertising the service on its website). The popups and redirects are triggered by its scripts hosted at engine.4dsply.com, a domain that AdSupply does claim:

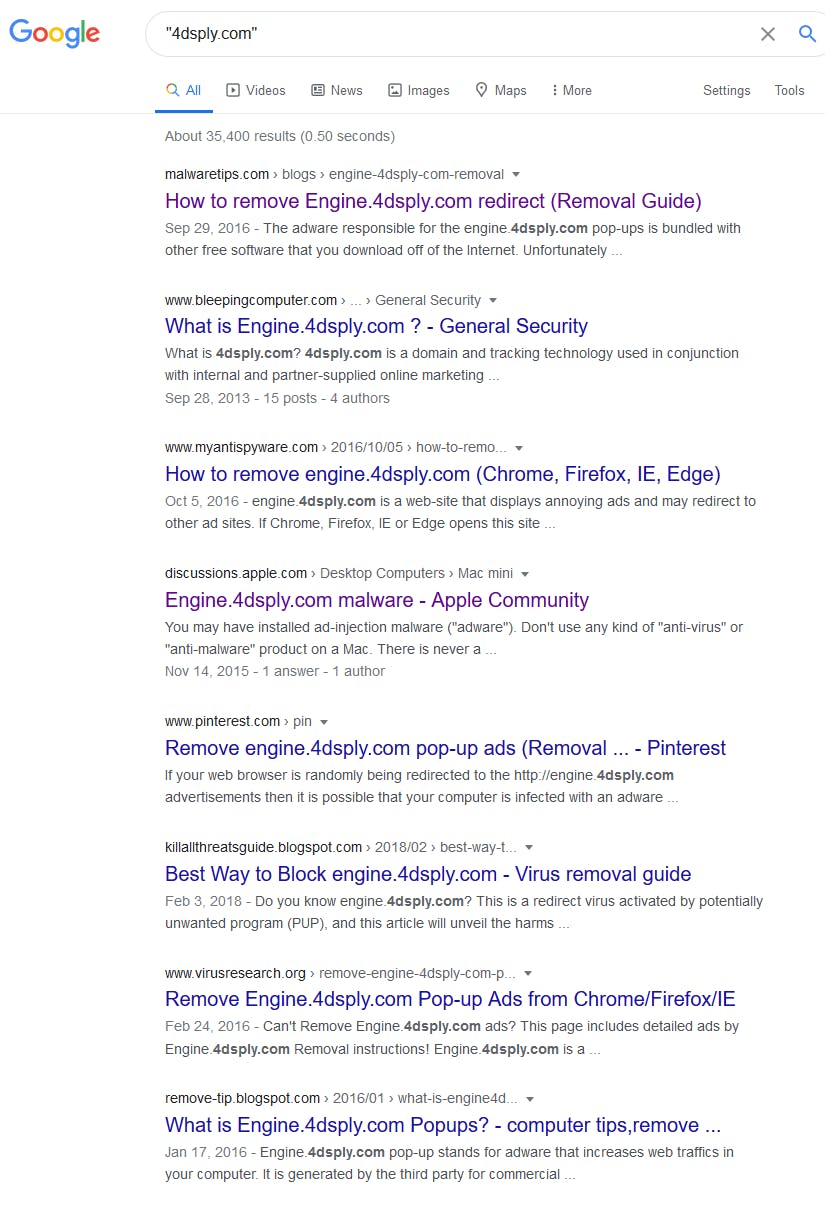

Google results for “4dsply.com” consist mostly of people asking how to remove malware or spyware.

When this happens, therightscoop.com makes money from AdSupply, and AdSupply makes even more money by helping techandgeek.com meet its goal of bringing real humans to the site.

The video even shows that techandgeek.com knows when traffic is paid and comes from AdSupply, but the site uses internal redirects to hide any UTM values that third-party vendors could use to uncover the scheme.

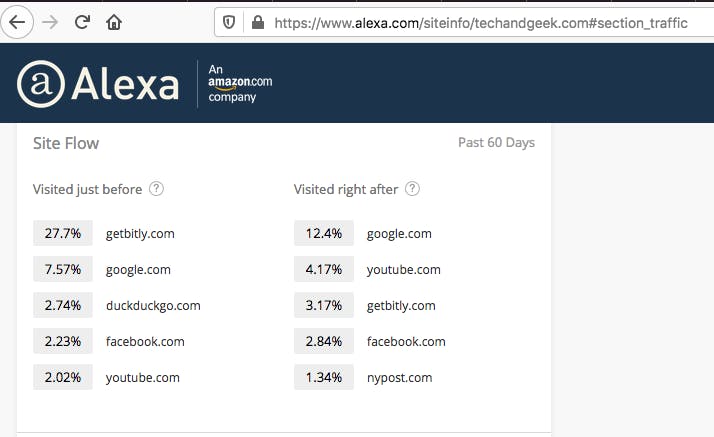
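To make the UTM point concrete, here is a minimal Python sketch of what a vendor would need to see. It assumes the vendor can observe the full redirect chain (first landing URL through final URL), which is exactly the visibility the scheme denies them; the function names and the URLs and parameter values in the example are hypothetical, not the values seen in the video.

```python
from urllib.parse import parse_qs, urlparse


def utm_params(url):
    """Return only the utm_* query parameters from a URL."""
    query = parse_qs(urlparse(url).query)
    return {key: values[0] for key, values in query.items() if key.startswith("utm_")}


def utm_lost_in_chain(chain):
    """True if the first hop carried UTM tags that the final URL no longer has."""
    first, final = utm_params(chain[0]), utm_params(chain[-1])
    return bool(first) and not final


# Hypothetical redirect chain: paid landing URL, then an internal redirect.
chain = [
    "https://destination.example/landing?utm_source=partner_x&utm_medium=pop",
    "https://destination.example/article/123",
]
print(utm_lost_in_chain(chain))
```

An analytics tag that only fires on the final page sees the clean URL, so the paid-traffic tags never reach any third party.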

Nevertheless, we can approximate how much of techandgeek.com’s traffic arrives this way using tools like Alexa.

Using what we know about this scheme, we can deduce the following:

- We know AdSupply uses getbitly.com and duckduckgo.com to hide the true source of traffic

- 30% of inbound traffic to techandgeek.com comes from those two sources

- As much as 30% of the visits to techandgeek.com could therefore be sourced from AdSupply or other laundering partners
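The deduction above amounts to measuring what share of visits arrive with a masking referrer. A minimal Python sketch follows, assuming you have a list of referrer URLs per visit (for example, exported from your own analytics); the function name `masked_share` and the sample referrers are hypothetical, and only the two domains come from the list above.

```python
from urllib.parse import urlparse

# Referrer domains identified above as used to mask laundered traffic.
MASKING_REFERRERS = {"getbitly.com", "duckduckgo.com"}


def masked_share(referrers):
    """Fraction of visits whose referrer host is a known masking domain."""
    if not referrers:
        return 0.0
    hits = 0
    for ref in referrers:
        host = urlparse(ref).hostname or ""  # empty referrer -> no host
        if host.startswith("www."):
            host = host[4:]
        if host in MASKING_REFERRERS:
            hits += 1
    return hits / len(referrers)


# Hypothetical referrers for ten visits; empty strings are direct visits.
visits = [
    "https://duckduckgo.com/",
    "https://getbitly.com/abc",
    "https://www.duckduckgo.com/",
    "https://www.google.com/search",
    "https://news.example/article",
    "", "", "", "", "",
]
print(masked_share(visits))
```

The result is an upper bound rather than a measurement: some DuckDuckGo referrals are genuine searches, which is why the estimate is phrased as “as much as.”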

It’s unlikely that all of these visits come from therightscoop.com; AdSupply has a network of thousands of sites that serve as starting points for the laundering process. In the past 30 days, we observed the following sites loading scripts from “engine.4dsply.com.” According to our research, at least 30% of sites with engine.4dsply.com scripts create pop-ups, making AdSupply an outlier in ad tech.

Not all of these sites send traffic to techandgeek.com; this is just one example which shows how easy it is to profit from hate and disinformation.

The traffic destinations change depending on the campaigns AdSupply needs to fulfill, so they are liable to rotate often.
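Finding which sites load scripts from engine.4dsply.com starts with checking a page’s script tags. The Python sketch below is a hypothetical, simplified check using only the standard library, not the method behind our 30-day observation: it only sees `<script src>` tags present in the static HTML, so scripts injected at runtime would require a real browser to catch. The class and function names and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ScriptSrcCollector(HTMLParser):
    """Collects the resolved src URL of every <script> tag in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                # Resolve relative and protocol-relative ("//host/x.js") URLs.
                self.sources.append(urljoin(self.base_url, src))


def loads_scripts_from(html, base_url, host):
    """True if the static HTML includes a script served from `host`."""
    parser = ScriptSrcCollector(base_url)
    parser.feed(html)
    return any(urlparse(src).hostname == host for src in parser.sources)


# Hypothetical page markup; the script path is made up.
sample_html = (
    '<html><head><script async src="//engine.4dsply.com/hypothetical.js"></script>'
    "<script>var x = 1;</script></head></html>"
)
print(loads_scripts_from(sample_html, "https://site.example/", "engine.4dsply.com"))
```

Running a check like this periodically across a list of sites is one way to watch the network as destinations rotate.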

Conclusions and Next Steps

- Even when brand safety tech works perfectly, with no false positives, hate and disinformation outlets can still easily make money by selling their human traffic to middlemen who will launder it for them.

- The only way to make this unprofitable is to make buying laundered traffic less attractive. One of DeepSee’s purposes is to identify both the origin AND the destination of such laundering events, allowing advertisers to hold each entity in the laundering process accountable.

- To get an idea of who else is selling popup/popunder traffic, try searching Google for “buy pop traffic.” If you have a department that vets new publisher partners, make sure the onboarding process includes traffic source analysis.