Over the past year, the economics of open-web publishing have undergone a seismic shift. This is largely thanks to generative AI, which, by reducing the cost of content creation to near zero, has made it possible to launch and monetize sites at a scale and pace previously difficult to imagine.

In search of a better understanding of how this shift is reshaping programmatic supply, we looked closely at how AI-generated domains enter, scale, and churn across the open web. What emerged was not simply an increase in low-quality inventory, or “AI slop,” but a deeper problem: the signals buyers rely on to judge quality are increasingly misaligned with how this inventory actually behaves.

How AI-generated supply impacts verification

One of the most striking findings from our data was the speed at which AI-generated supply now enters and exits the market. In fact, many AI-driven domains move from registration to monetization in a matter of weeks, delivering the bulk of their impressions early in their lifecycle before disappearing entirely.

What we observed was:

- Domain history has become less informative. Traditional verification approaches rely on time-based indicators such as longevity or accumulated behavior; when a site’s entire lifecycle fits inside a single quarter, those indicators lose much of their value.

- Blocklists are also struggling to keep pace. By the time a low-quality domain is identified and classified, it has often already churned out of existence and been replaced by another site using the same templates and monetization strategies.

- Volume is compounding the problem. Across our data, we noticed growth from hundreds of AI-flagged domains to tens of thousands in a short span, with more than 140 billion impressions tied to this class of supply in a single month.

This has led to an environment where verification systems are constantly reacting to yesterday’s inventory, while new supply continues to move through the ecosystem unchecked.
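
One way to make the lifecycle pattern concrete is to measure how front-loaded a domain's delivery is. The sketch below is a minimal illustration, not our production methodology: the function name, the input shape (a registration date plus a mapping of day to impression count), and the 90-day window standing in for "a single quarter" are all assumptions.

```python
from datetime import date


def early_impression_share(
    registered: date,
    daily_impressions: dict[date, int],
    window_days: int = 90,  # assumption: roughly one quarter
) -> float:
    """Fraction of a domain's impressions delivered within window_days of registration."""
    total = sum(daily_impressions.values())
    if total == 0:
        return 0.0
    early = sum(
        count
        for day, count in daily_impressions.items()
        if 0 <= (day - registered).days < window_days
    )
    return early / total


# Hypothetical delivery log for a single domain.
log = {
    date(2025, 1, 20): 800,
    date(2025, 2, 10): 150,
    date(2025, 6, 1): 50,
}
share = early_impression_share(date(2025, 1, 1), log)
print(f"{share:.0%} of impressions arrived in the first 90 days")
```

A share close to 1.0 on a recently registered domain suggests that history-based signals had little time to accumulate before most of the spend was delivered.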

Why AI slop causes quality signals to fail

Our analysis shows that AI-generated supply does not succeed by breaking rules, as one might assume, but by operating comfortably within them. We found that three recurring patterns can explain why legacy quality signals struggle in this environment.

- Optimization systems reward cost and scale. AI-generated video inventory frequently clears at significantly lower CPMs than cleaner supply. Buying systems interpret these prices as efficiency, directing spend toward environments that are inexpensive to produce rather than durable or credible.

- Brand safety emphasizes compliance, not substance. Because AI slop avoids sensitive topics and unsafe language, many pages pass suitability checks without friction. Compliance becomes a proxy for quality, even when content offers little original insight or editorial depth.

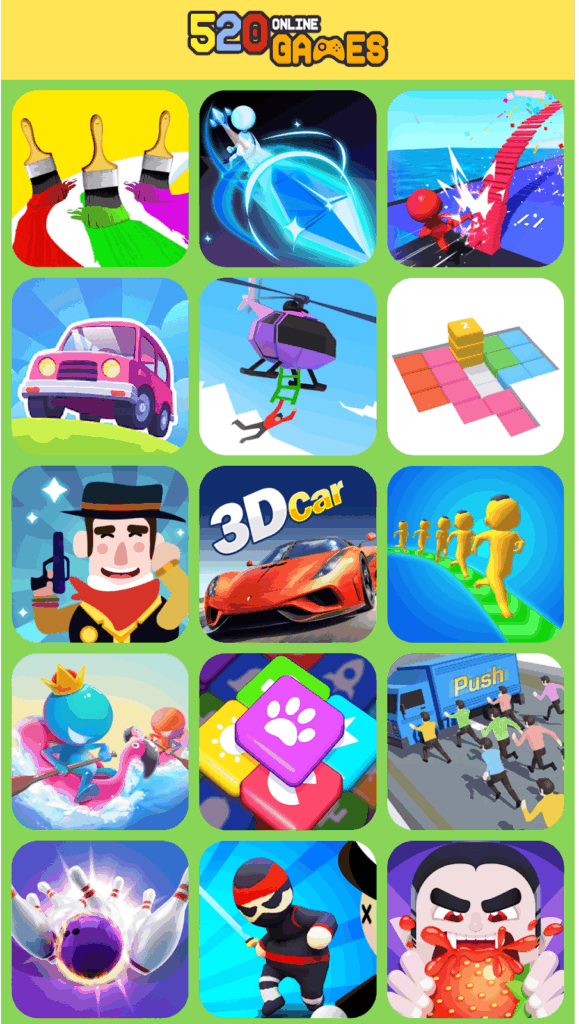

- Engagement can be engineered without intent. Many AI-generated pages are designed to maximize session length and page depth through templated layouts, forced navigation, and repetitive internal links. In categories like Games & Trivia, these sites show long sessions and multiple page views, even when traffic quality is questionable.

cowboygamee.com

sqskj.com

jmh128.com

Template-driven game sites appear as separate properties in reporting, while identical layouts and interaction mechanics inflate engagement metrics without adding audience diversity.

Together, these dynamics explain why synthetic supply can appear attractive to systems optimized for efficiency, even as it quietly erodes value.

When “safe” inventory still undermines trust and performance

Across our analysis, we also discovered that much of this inventory is not unsafe in the conventional sense. Instead, it lacks depth, credibility, and editorial intent.

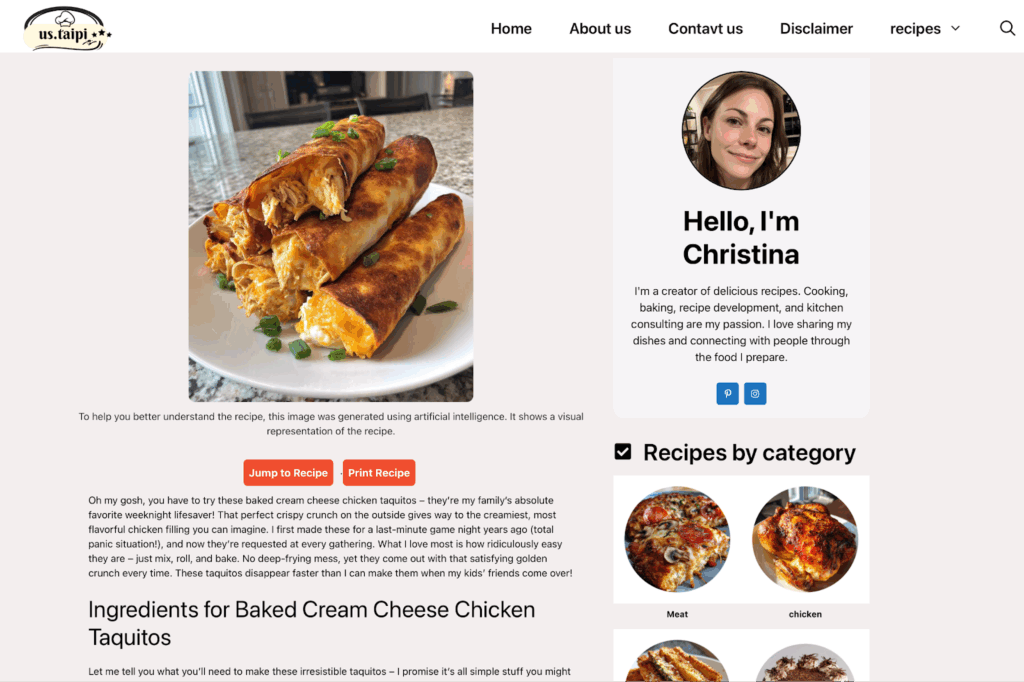

More specifically, we found some sites presenting themselves as editorial properties while simultaneously publishing hundreds of AI-generated pages in incredibly short periods. This pattern is particularly pronounced in high-value categories like Business & Personal Finance and Health & Fitness, where author identities were inconsistent or fabricated, images recycled or AI-generated, and social and editorial signals weak or altogether absent.

taipi.org

This site shows conflicting identity signals: article bylines don’t match the About page, social links are broken, and imagery appears AI-generated. While the layout and content pass basic brand-safety checks, this masks a lack of clear ownership or accountability.

At first, none of this may seem catastrophic; however, over time, polluted supply undermines campaign performance: frequency might increase without deliberate planning, or optimization systems may start learning from synthetic engagement patterns. Legitimate publishers can then appear less efficient by comparison, despite offering stronger environments.

How buyers can evaluate AI-driven supply

These findings mean that buyers must rethink their approach to quality. Rather than relying on inferred scores alone, buyers can use the checks below to build a clearer picture of how an environment actually operates.

Low effort – fast checks anyone can do

- Suspicious domain names: A lot of AI slop gives itself away in the URL. Look for misspellings, doubled letters, awkward word mashups, random strings, and cheap TLDs. These domains aren’t built to last. They’re built to get approved, monetize, and disappear. If the name feels disposable, it probably is.

- Authorship that doesn’t behave like a real publisher: Many sites show an author, but nothing about it holds up. One name across hundreds of articles, generic bios, “Admin” as the byline, no growth in author count as content explodes – real-life publishers don’t work like that.

- Length without substance: Some pages are massive (thousands of words, endless images, a long “reading time”) but say very little. Padding like this is designed to look comprehensive rather than to inform.
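
The first two checks above can be roughed out in a few lines of Python. This is a minimal sketch under stated assumptions, not a classifier: the flag names, the regular expressions, and the short `CHEAP_TLDS` set are illustrative choices of ours, and any real list of low-cost TLDs would need to be maintained separately.

```python
import re
from collections import Counter

# Assumption: an illustrative set of low-cost TLDs, not a vetted list.
CHEAP_TLDS = {"xyz", "top", "click", "icu"}


def domain_flags(domain: str) -> list[str]:
    """Return heuristic flags for a domain name. A flag is a prompt to look closer, not a verdict."""
    name, _, tld = domain.lower().rpartition(".")
    flags = []
    if tld in CHEAP_TLDS:
        flags.append("cheap_tld")
    if re.search(r"(.)\1$", name):  # name ends in a repeated character
        flags.append("doubled_final_letter")
    if re.search(r"[a-z]\d|\d[a-z]", name):  # a letter directly next to a digit
        flags.append("letters_mixed_with_digits")
    if len(name) >= 5 and not re.search(r"[aeiou]", name):
        flags.append("no_vowels")
    return flags


def top_byline_share(bylines: list[str]) -> float:
    """Share of articles that carry the single most common byline."""
    if not bylines:
        return 0.0
    counts = Counter(b.strip().lower() for b in bylines)
    return counts.most_common(1)[0][1] / len(bylines)


for d in ["cowboygamee.com", "sqskj.com", "jmh128.com"]:
    print(d, domain_flags(d))

# Hypothetical byline sample: nine "Admin" articles and one named author.
print(top_byline_share(["Admin"] * 9 + ["Jane Doe"]))
```

Each of the three game domains named earlier in this article trips at least one of these flags, but so will some legitimate sites, so the output is best treated as a shortlist for manual review rather than a blocklist.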

Medium effort – page inspection and pattern spotting

- Visual overproduction: AI-driven sites lean hard on images to scale. By early 2026, AI slop domains were using AI-generated images at close to 50%, while clean sites barely moved. When every article is packed with generic, stock-looking visuals or repeated thumbnails, automation is clearly doing the work of an editor.

- Engagement that feels forced: Games and trivia sites often show long sessions and deep paths; however, this is only because users are pushed through “next” buttons and repetitive flows. High engagement isn’t meaningful if every site works the same way.

Higher effort – patterns observable at scale

- The same site wearing different domain names: One of the clearest signals is repetition, be that of layout, navigation, or content ratios. Even if different URLs lead reporting to treat this as diversity, it’s actually a single template copied dozens of times.

- Text that looks generated when you compare it side by side: Individually, pages may look fine. At scale, patterns show up fast: repeated phrasing, predictable structure, lots of words with very little information.

- OSINT-style checks: Tools that compare text across the web or flag reused imagery help surface patterns that you might otherwise miss. A complement to human judgment, these tools help you spot the same tricks faster and more effectively.
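
The side-by-side comparison described above can be approximated with word shingles and Jaccard similarity, a common near-duplicate technique. The sketch below is one possible approach rather than a description of any specific tool: the function names, the three-word shingle size, the 0.5 threshold, and the `.example` domains are all hypothetical.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping k-word sequences in the text (empty if the text has fewer than k words)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union; 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_pairs(pages: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Pairs of sites whose page text overlaps at or above the threshold."""
    sets = {site: shingles(text) for site, text in pages.items()}
    pairs = []
    for left, right in combinations(sorted(sets), 2):
        score = jaccard(sets[left], sets[right])
        if score >= threshold:
            pairs.append((left, right, score))
    return pairs


# Hypothetical page text from three domains.
pages = {
    "site-a.example": "Play the best free trivia games online and click next to continue",
    "site-b.example": "Play the best free trivia games online and tap next to continue",
    "site-c.example": "Quarterly earnings rose as the central bank held interest rates steady",
}
for left, right, score in similar_pairs(pages):
    print(f"{left} ~ {right}: {score:.2f}")
```

The same comparison can be run on sequences of HTML tag names instead of words to surface shared templates. Comparing every pair grows quadratically with the number of sites, so at larger scale a sketching method such as MinHash is the usual substitute.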

Taken together, these approaches provide buyers with a more grounded starting point for evaluating inventory in an environment where synthetic supply increasingly blends in with legitimate content.

Observing the future of the open web

At DeepSee.io, we believe that sustained insights about inventory on the open web require observation. For buyers navigating an ecosystem shaped by generative AI, we offer a level of visibility that supports more durable definitions of quality and helps prevent optimization systems from drifting toward synthetic supply.

Want to learn more about inventory in the age of AI? Download the full AI Slop and the Collapse of Quality Signals in Programmatic report below, or contact us to request a walkthrough of how DeepSee.io can help you evaluate supply across the open web.